The 2024 Nobel Prize in Physics has been awarded to scientists John Hopfield and Geoffrey Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks.”

Inspired by ideas from physics and biology, Hopfield and Hinton developed computer systems capable of memorizing and learning from patterns in data. Although they never collaborated directly, they built on each other’s work to lay the foundations for the current rise of machine learning and artificial intelligence (AI).

What are neural networks?

Artificial neural networks are behind much of the AI technology we use today.

In the same way that our brain has neural cells linked by synapses, artificial neural networks have digital neurons connected in various configurations. Each individual neuron does not do much. The magic lies in the pattern and strength of the connections between them.

Neurons in an artificial neural network are “activated” by input signals. These activations cascade from one neuron to the next, transforming and processing the input information along the way. As a result, the network can carry out computational tasks such as classification, prediction and decision making.

Much of the history of machine learning has been about finding increasingly sophisticated ways to form and update these connections between artificial neurons.

While the idea of linking systems of nodes to store and process information comes from biology, the mathematics used to form and update these links comes from physics.
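As a rough illustration of how activations cascade, here is a minimal Python sketch of a three-neuron network. The function names, the threshold activation and the hand-picked weights are all assumptions made for this example; real networks learn their weights rather than having them set by hand.

```python
def step(x):
    """Threshold activation: the neuron fires (1) when its total input is positive."""
    return 1 if x > 0 else 0


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(total)


def xor_network(a, b):
    """Two hidden neurons feed one output neuron (weights chosen by hand)."""
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron([a, b], [1.0, 1.0], -0.5)
    h_and = neuron([a, b], [1.0, 1.0], -1.5)
    # Output neuron fires when OR is active but AND is not.
    return neuron([h_or, h_and], [1.0, -1.0], -0.5)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_network(a, b))
```

No single neuron here can tell whether exactly one input is on, but the three together can, which is the sense in which the connections matter more than the individual units.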

Networks that remember

John Hopfield (born 1933) is an American theoretical physicist who made important contributions throughout his career to the field of biological physics. However, the Nobel Prize in Physics was awarded to him for his work developing Hopfield networks in 1982.

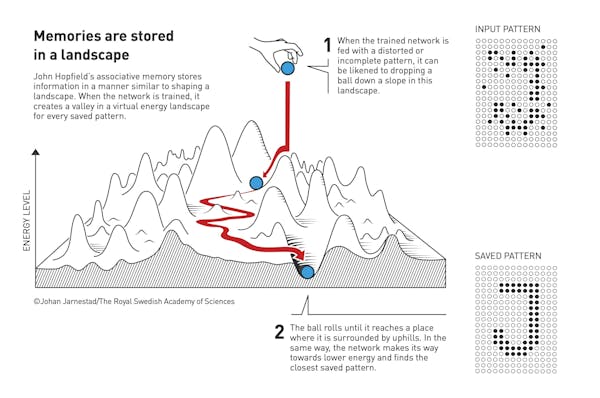

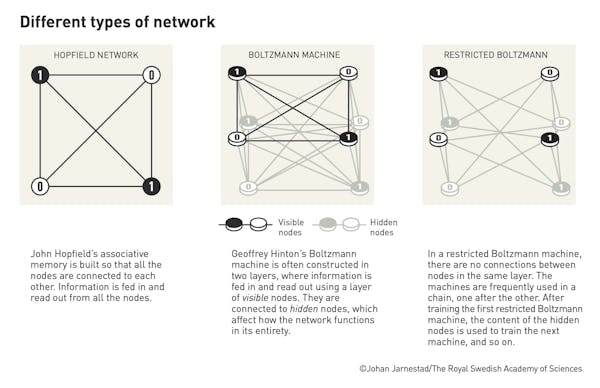

Hopfield networks were one of the first kinds of artificial neural networks. Inspired by principles from neurobiology and molecular physics, these systems demonstrated for the first time how a computer could use a “network” of nodes to remember and retrieve information.

The networks Hopfield developed could memorize data (such as a collection of black and white images). These images could be “remembered” by association when the network was prompted with a similar image.

Although of limited practical use, Hopfield networks demonstrated that this type of artificial neural network could store and retrieve data in novel ways. They laid the groundwork for Hinton’s later work.
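Recall by association can be sketched in a few lines of Python. This is a simplified illustration, not Hopfield's original formulation: the function names, the 8-node size and the two stored patterns are assumptions chosen for the example, with +1 and -1 standing in for black and white pixels.

```python
def train(patterns):
    """Hebbian rule: strengthen links between nodes that agree in the stored patterns."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Update nodes one at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            # A node takes the sign of its input; it keeps its value on a tie.
            new = state[i] if field == 0 else (1 if field > 0 else -1)
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


# Two tiny "images" to memorize (hypothetical example data).
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)

# A copy of the first image with one pixel flipped.
noisy = [-1, 1, 1, 1, -1, -1, -1, -1]
restored = recall(weights, noisy)
```

Given the damaged image, the network settles back onto the stored image it most resembles, so `restored` equals the first entry of `stored`.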

Machines that can learn

Geoff Hinton (born 1947), sometimes considered one of the godfathers of AI, is a British-Canadian computer scientist who has made a number of important contributions to the field. In 2018, along with Yoshua Bengio and Yann LeCun, he was awarded the Turing Award (the highest honor in computer science) for his efforts to advance machine learning in general and, in particular, a branch of it called deep learning.

The Nobel Prize in Physics, however, is awarded specifically for his work with Terrence Sejnowski and other colleagues in 1984, developing Boltzmann machines.

These are an extension of the Hopfield network that demonstrated the idea of machine learning: a system that lets a computer learn not from a programmer, but from examples of data. Drawing on ideas from the energy dynamics of statistical physics, Hinton showed how this early generative computer model could learn to store data over time by being shown examples of things to remember.

The Boltzmann machine, like the Hopfield network before it, had no immediate practical applications. However, a modified form (called the restricted Boltzmann machine) proved useful in some applied problems.

More important was the conceptual advance that an artificial neural network could learn from data. Hinton continued to develop this idea. He later published influential papers on backpropagation (the learning process used in modern machine learning systems) and convolutional neural networks (the main type of neural network used today for AI systems that work with image and video data).
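One way to see the link to statistical physics is in how a single unit of a Boltzmann machine is updated: rather than switching deterministically, it switches on with a probability set by its input and a “temperature”. The Python sketch below shows only that update step, under simplifying assumptions (0/1 units, no bias terms, hypothetical function names); it leaves out the learning procedure that adjusts the weights from examples.

```python
import math
import random


def on_probability(field, temperature=1.0):
    """Chance that a unit switches on, given the summed input from its neighbours."""
    x = field / temperature
    # Logistic function, written to avoid overflow for large negative inputs.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def update_unit(weights, state, i, rng, temperature=1.0):
    """Resample unit i (0 or 1) based on the current states of the other units."""
    field = sum(w * s for w, s in zip(weights[i], state))
    state[i] = 1 if rng.random() < on_probability(field, temperature) else 0
    return state[i]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the run is repeatable
    weights = [[0.0, 2.0], [2.0, 0.0]]  # two mutually reinforcing units
    state = [0, 1]
    print(update_unit(weights, state, 0, rng))
```

At high temperature every unit behaves almost like a coin flip; as the temperature drops, the update approaches the deterministic rule of a Hopfield network.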

Why this award now?

Hopfield networks and Boltzmann machines seem small compared with what AI can do today. Hopfield’s network contained only 30 neurons (he tried to make one with 100 nodes, but it was too much for the computing resources of the time), while modern systems like ChatGPT can have tens of millions. However, the Nobel Prize underscores how important these early contributions to the field were.

Although the recent rapid progress of AI, which most of us know from generative AI systems like ChatGPT, might look like a vindication of the early proponents of neural networks, Hinton, at least, has expressed concern. In 2023, after leaving his decade-long position in Google’s AI division, he said he was scared by the pace of development and joined the growing crowd of voices calling for more proactive regulation of AI.

After receiving the Nobel Prize, Hinton said that AI will be like the Industrial Revolution, except that instead of surpassing our physical capabilities, it will surpass our intellectual ones. He also noted that he still worries his work could lead to systems smarter than us that end up taking control.

This article was originally published on The Conversation.